Understanding Audio Tokens and Their Role in TTS

In our previous blog, we explored the concept of audio tokenizers and the process behind building them. Today, we’re diving deeper into how these tokens are used to train TTS and end-to-end audio models.

Audio Modeling with Tokens

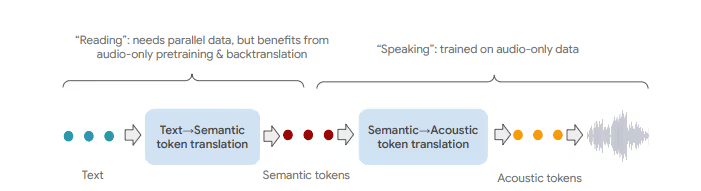

As we’ve previously discussed, semantic tokens represent how something is read, while acoustic tokens capture how it’s spoken. Since transformers excel at modeling sequences, and tokens form sequences, the natural next step is to use a transformer for audio tokens.

When we train a transformer on only acoustic tokens, the result captures the sound of human speech but misses the linguistic meaning. Here’s an example:

On the other hand, training on semantic tokens results in the model capturing some language understanding, though it lacks the richness of natural speech:

How TTS Works with Tokens

Since semantic tokens are closer to how we process language, they are crucial for building a TTS system. The TTS system works by converting text tokens into acoustic tokens through two stages:

A transformer first models text-to-semantic tokens, learning how a certain piece of text should be read. For example, it would learn that the words “put” and “but” are read differently.

Another transformer then takes these semantic tokens and converts them into acoustic tokens to generate the speech.

However, semantic tokens alone don’t capture any voice characteristics like pitch, tone, or gender. This results in different voices being generated in each run of the TTS model.

Here’s the first run:

Here’s the second run:

As you can hear, each run generates different voices due to the randomness in how the acoustic characteristics are assigned.

Generating TTS in a Desired Voice

To get the TTS to generate speech in a specific voice, we first convert a sample of the desired voice into acoustic tokens. These tokens capture the unique characteristics of the voice, which can then be added as a “prompt” to the second transformer.

This allows the model to generate speech in the desired voice consistently.

Here’s a voice sample:

Here’s the TTS-generated audio with that voice:

Training Data Challenges

To train a TTS model like this, you need large amounts of parallel text-to-audio data. Unfortunately, this kind of labeled data is hard to come by. However, we can leverage unlabelled audio data to pretrain the models. Large datasets of audio can generate many semantic tokens, which helps reduce the amount of parallel data needed for training a good TTS.

Expanding to End-to-End Audio Models

Large language models (LLMs) like LLaMA have shown great success in modeling text. But can this success be applied to audio? The challenge is that LLMs only understand text tokens. To train them on audio, we must first expand the model’s vocabulary to include audio tokens.

LLMs have an embedding layer that maps tokens into vectors. By introducing audio tokens into this layer and training the model on data containing both text and audio, we can teach it to process audio just like text.

E.g. we will train with examples like:

<In this audio clip how many times was the word LLM spoken>

This teaches the model to understand audio tokens and capture words from them.

Similarly:

<Sing ‘mary had a little lamb in opera voice’’>

This allows the model to understand and generate sequences of audio tokens, opening the door to more complex interactions.

Unlocking New Possibilities

By integrating text and audio in a single model, we can achieve more sophisticated outcomes than traditional ASR (Automatic Speech Recognition) or TTS systems. These models can understand not just speech, but other sounds too, like a cough, a knock on a table, or even emotional nuances in a voice.

This leads to a new level of conversational AI. With these end-to-end audio models, you’ll soon be able to interact with AI systems just like you would with a friend. These systems will not only respond in real-time but will also understand and replicate nuances in speech that go beyond words alone.